Guest post by Elliott Murray—Principal Consultant at Infinity Works—a Pactflow consulting partner.

The Why and an Ode to Testing Past

As I’m what might be considered a veteran of the software industry, I remember reading J. B. Rainsberger’s somewhat controversial piece at the time piece regarding integrated tests are a scam. There is an early section where he discusses the code branches and number of integration tests you’d need to satisfy basic correctness. As history seems doomed to repeat itself I find myself facing similar arguments today as an advocate of Contract Based vs End to End (E2E) testing for distributed systems. It’s an argument I often blithely respond to by throwing out some comment about exponential vs linear behaviour knowing full well that if ever asked to prove this it would be as if the curtain was pulled back. So, as we have spent a year indoors, I found myself scratching this ‘itch’ to see if the above does in fact hold true.

Firstly though some disclaimers. There are a lot of other reasons for adopting either E2E or Contract Testing. I’m not considering those so if you feel your E2E test suite gives you value in other ways, I will not try to dissuade you of those here. But maybe these people will. I’m also the maintainer of Pact Python. I receive no direct financial benefit for this but obviously I have a bias towards Contract Testing. This is a reasonably theoretical and advanced topic. No coding is required, however a solid understanding of automated testing including Contract Testing, Continuous Integration(CI) Dev-ops and distributed architecture paradigms such as Function as a Service (FAAS) or Microservices is assumed. And you may need to dust down your high school maths and I’ll throw in a sprinkling of Graph Theory for good measure.

Defining the problem

I’ll start with a couple of assumptions to narrow the problem. Firstly I’m going to make the assumption that you want to have complete test coverage of each interaction in your system. That is, each contract has some automated test that will fail if a breaking change is introduced to either a consumer or provider. This proof is based on the consumption of compute resources which you might think of as servers. So the question I am essentially trying to answer is: when I make a change how many servers do I need to spin up to know if I have a broken contract in my system? And secondly, as we live in an era of powerful cloud compute services, I am going to mostly remove time from the equation. I do this by making the assertion that you can spin up as many servers as needed on a charge by use model. So in theory you can run infinitely in parallel for the same cost as running them sequentially. This is an oversimplification that plays in the favour of E2E tests performance as otherwise they will also pay a time penalty in addition to compute resources. This, I argue, more than compensates some potential spot pricing savings.

I’ll provide a proof that Contract testing in a running environment scales in constant time with complexity while E2E tests displays in the absolute best case scenario quadratic performance and drops off quickly from there. I’ll finish with substituting the variable of ‘compute’ with human effort around building tests, managing test set up and debugging to hopefully convince you this cost in E2E tests is found across multiple vectors.

Words matter

Here the terms Consumer to mean ‘client’ and Provider as ‘Server’. The reason for the different terminologies is that in todays’ systems clients can be servers and vice versa so this traditional terminology breaks down. I am going to analyze performance in systems with a number of Nodes (maybe you think of these as servers) and Connections (you can think of these as contracts) The higher the number of Nodes(N) and Connections(E) the more complex the system. Ultimately we want a function that minimizes the number of Test Nodes(Y) needed to test these systems or more formally:

I have used the somewhat overloaded term Test fixtures in this context to mean a logical group of tests and setup that can be executed in relation to one Contract. It’s a somewhat arbitrary grouping and hugely simplifying concept as it ignores a lot of branch analysis of code. This would affect E2E negatively by worsening it’s performance as it increases the cardinality of cases we have to consider. Actually a read of J.B. Rainsberger’s referenced article should convince you of this. However, this is already a long article and is maybe something I’ll explore further in a follow up article or talk.

A simple example

We have 2 nodes with one being solely a Consumer and one solely a Provider. There is one connection from Consumer to Provider.

Note: as collaborators can be circular this is in effect a directed graph, although not acyclic. So, while in this example it isn’t, it would be perfectly feasible to have a connection going from Provider 1 to Consumer 1.

System Complexity

So if we want to do a Contract Test how many test fixtures would we have? In Contract Testing you typically have a test fixture for the Consumer and a separate one for the Provider. The only artefact that links them is the shared published contract, which results in 2 test fixtures. Now it depends on the framework you use but in Pact they would each run on a CI agent (server). We don’t need anything else as we are just verifying a contract.

What about E2E tests? We require 2 nodes to run the whole environment. But also we need an Orchestrator to run this test. This again could be your CI server that has your test framework and tests installed and then calls the tests. That means we need 3 servers to run this simple E2E test.

So even in this simple case we can see that for E2E tests it consumes more Test Nodes yet we end up with less test fixtures.

One Consumer, Two Providers

Let’s add an extra provider for a slightly more complex example.

If we are doing contract testing how many test fixtures would we have? Well the consumer has two contracts it now has to maintain and each provider has to maintain one reciprocal relationship with the consumer. That will result in 4 test fixtures for Contract testing.

As each test group runs on just one server we can also say

What about for E2E tests? Well there are 2 connections, so 2 test fixtures i.e. there is a direct relationship with connections:

Each test group will require 3 node setups so that is 6 servers. But we also need to have our orchestrator.

We can generalize to:

Finding our best and worst case scenario

From the previous section we ended up with 2 formulas for how many nodes we would need for running a full Pact and Contract testing suite and the resulting test fixtures that result from this:

We can use some basic Graph theory to determine a best and worst case based on the complexity of our system under test. The simplest will be where each of our Consumers has exactly one Provider relationship (and we have a fully connected system). The most complex is where each Node acts as a Provider to every other Node. So as we have a directed connected graph where E is the number of edges we can then say:

You can read more here. Using substitution for contract testing we can define these performance metrics:

The minimum is linear. However, we can see that we see factorial behaviour as we approach a high number of relations. The counter to this is our test coverage rises in a one to one relationship with this.

While for E2E substituting the values for E from above we end up with:

Which while not truly exponential is clearly not good. Even for the simplest systems E2E performs similar to how Contract Testing does for the most complex. And E2E tests scale very poorly as the system becomes larger and more interconnected. In addition it’s test coverage does increase but at half the rate that Contract Testing does.

This leads us to determine the cost per test fixture. If we divide the overall cost by the number of Tests we end up with the cost per test which breaks down to:

That means adding a new connection or edge into a system with Contract testing is a constant additional cost to your overall cost. However E2E will scale with the number of Nodes, so for each new Node you add you will incur a cost of N+1 to effectively test it. You are in effect punished for growing the system.

Let’s expand this with some numbers to see how a moderately complex system might perform. Firstly let’s fix the size of our system and vary the density of connectivity. We have a best and worst case scenario but an average would also be helpful here. For that I am going to take 4 connections or edges as the average case. This is based on research into contracts on the Pact Broker Service. While certainly not a formal definition it is hopefully indicative of real world usage. So for just a 20 node architecture:

Which with a graph shows the significant difference in rate of growth:

We can see that in this example Contract Testing requires approximately a tenth of the compute resources yet provides twice the number of test fixtures which equates to our Cost formula.

What about where we fix the average number of edges but now change the number of nodes? Let’s work on our previous assumption of an average of 4 edges per Node.

And with a graph to help visualize that E2E tests display quadratic behaviour:

This explains why your big tech companies don’t do E2E testing as no amount of compute is going to get you through this problem. You are punished for the size and the connectivity of your distributed system when these are often the two things you typically want to scale.

Optimizing our testing

So far our hypothesis has been on testing the whole system, however, this is generally not how production systems have changes deployed. We can have a test strategy that focuses on testing just the deltas that changed and their dependencies. How does this affect the relevant performance of both approaches?

Batch testing in E2E

A common optimization for E2E tests is to group our tests together. We could group our fixtures and have one fixture actually test more than one relationship. Let’s say into α batches. I am going to make a couple of simplifying assumptions for now. They are that these batches are perfectly balanced (so tests are evenly distributed between batches) and every edge has all it’s tests in one batch. On a change we only run one of those batches. We then end up with these formulas:

So potentially in the 20 node example above if we had 10 batches that would reduce our average case number of servers we’d need down from 1,680 to 168 depending on the sampling of your batches. However, and significantly this doesn’t actually affect our cost per test as we also have to divide our number of fixtures by this same α batches effectively cancelling them out (so 380 would become 38):

Eventually reduces back to:

So we can reduce the effort in checking for one delta of our system. However, it doesn’t reduce the cost of each test we have to run. This becomes more significant when we look at things such as test setup and debugging which require human effort. And it should be noted our simplifying assumptions are incredibly complex problems to solve. To maintain the invariant of perfectly balanced batches with no cross cutting fixtures would mean every time we add a new node or edge we would have to rebalance all our tests. This is non trivial so ultimately we end up with unbalanced batches with contracts overlapping batches. This results in redundant tests and non deterministic cost for testing a change. And in the case where we have a delta on a highly connected node (e.g. an authentication service) we will still have to run nearly the full suite to be sure and remove any saving.

Can we make some similar optimizations for Contract testing where there is a single delta?

A more complex interconnected example

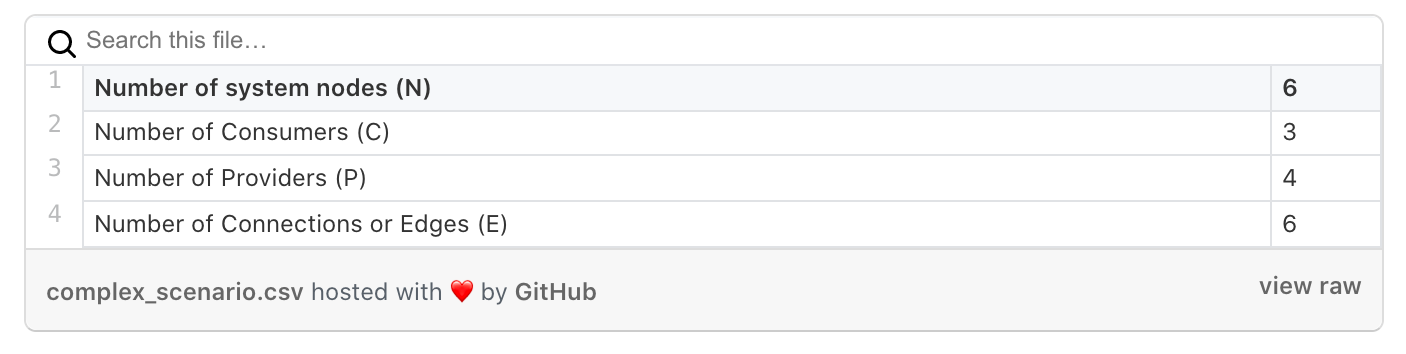

In this example we have an extra layer. Provider 1 now has 2 consumers but it also has a downstream collaborator Provider 4. Also if you look closely you will see there are Nodes that do not have contracts with each other which is what we would expect from a Microservices architecture.

Let us assume we made a change to Provider 1. Previously we determined that to run a full test of all our contracts it would cost:

This means our cost Y would be 12. However, let’s look a little closer and highlight what actually needs testing:

Provider 1 in green has to test it honours the contract still made with Consumer’s 1 and 2. It may need to publish a new contract with Provider 4 which in turn has to check that it still honours the contract with Provider 1. So in this scenario we have 3 nodes with a single test fixture and one node needs to run 3 test fixtures itself (This in itself is actually a slightly worse case scenario because it is actually possible to detect no changes to those contracts meaning only Provider 1 would need to be strictly invoked) We do not need to check any of the relationships involving Provider 2 or 3.

Can we generalize this? We have N number of Nodes and for any Node between 0 and N will have an index i. We can then derive this:

An interesting observation from this equation is that our Contract Testing effort is no longer dependant on the size of our architecture but the density of connections. What are our min and max values of E for this Node i?

Minimum is 1 as in it has 1 direct contract. And the max, using our centralised authentication service example again, must equal the number of Nodes N minus itself. So:

Gives us:

In our absolute worst case of changing our centralised authentication service to test a change with Contract testing will require as many servers as we have Collaborators (Microservices) We now have a linear property to our testing. Further we can naively predict an average using the average of edges per node:

And in most cases you will find this to be just a few interactions. In fact it will tend towards our average number of connections:

So for our 20 node example with on average 4 edges per node (so 80 overall) we would require just 5 Nodes to test the change and could be very confident about whether there were regressions or not.

Using measurements other than Compute

Now it may have occurred to you that there are some advanced techniques to reduce the costs of E2E tests. We looked at batching but I’m sure with a bit of thought applied to your system you could come up with some other creative ways to game the numbers. While I’m convinced you’ll not achieve deterministic and linear performance let’s briefly apply this thinking to some other costs in running E2E tests and see if the same logic still holds true.

Firstly there is the effort in writing the tests themselves and understanding how many setups we have to do. By setups I mean things like seeding data and preparing the system. This is easy to see how our theories hold true still. If we add one node with one connection we will with Contract Tests just have two tests to write. But for E2E we will have to consider setting up every service in our system plus this new one.

Let’s suppose we have an error. How long will it take us to find that error from our test output? Well in Contract Testing it has to be in the Node that has changed or one of it’s Providers or Consumers. This is our

holding true. Whereas for End to End we have to consider all the nodes in the system. And in fact also there may be an issue with the test or orchestrator itself so there is our plus one. In fact I think if you readJ.B. Rainsberger’s article I mentioned at the beginning you’ll realize debugging is even worse as you have multiple code paths to consider in a multiplicative fashion. Previously I’ve grouped all the tests you might have between a Consumer and Provider as one test fixture but in reality there are multiple paths through that. Contract testing can scale with this just slightly worse than linear but E2E tests will tend to exponential behaviour.

Conclusion

So when I started this mental exercise I already implicitly knew that E2E scaled poorly. I knew from personal experience it’s hard and error prone. Even with a lot of theoretical ‘best case’ scenarios applied here you will not beat quadratic performance in relation to the size of your system. And, while you might be able to game one metric, hopefully I’ve convinced you that you will simply pay that cost elsewhere. It’s fairly obvious now why E2E tests become practically impossible at a certain threshold of size and complexity.

I’ve seen a lot of people reject tools like Pact because it is a difficult concept to pick up. But I have also seen that when people overcome that and become ardent supporters as ‘it makes sense’. I feel the maths support and explain this feeling. Our running cost for a change being:

means it scales with your architecture.

An important takeaway for me was that the cost of testing your contracts doesn’t depend on the size of your system.

This is a heuristic that is blindingly obvious when you step back and think about it. It is why this technique scales so well in larger and more complex environments. At the same time we are able to achieve over twice the code coverage of E2E tests best case scenario. These combined mean we can find and respond to failures more quickly and precisely.

This blog post was authored by Elliott Murray and originally published on Medium.

Got feedback for us? We'd love to hear from you. Simply drop us a line at hello@pactflow.io or follow us on Twitter.