Introduction

This is the second instalment of a three-part series on schema testing. In part one, we discussed the differences between schema testing, specifications and contract testing, and highlighted the trade-offs of each.

In this post, we'll review the most popular approaches to schema testing.

Schema testing strategies

Let's briefly discuss the three most common approaches to testing with schemas. We will use a JSON Schema for a fictional products API to illustrate them, but these approaches should also work for other schema formats such as OAS, GraphQL, Protobufs, Avro and so on.

1. Code generation

This approach is the simplest to implement and involves inferring schemas from code written in statically typed languages such as TypeScript, Java or Golang.

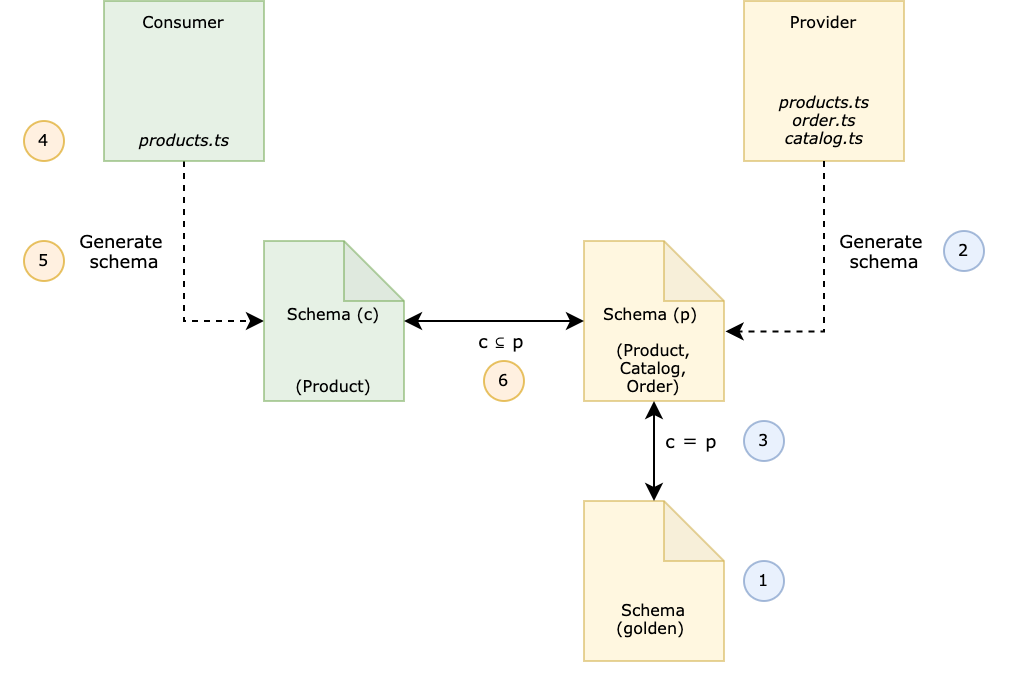

The general process looks like this:

- Somebody (probably the Provider) writes a schema for the interface by hand

- Provider generates a schema from the code

- Provider compares the output schema to the desired one. The full schema must match, otherwise the build fails.

- Consumer generates a schema from their code

- Consumer compares the output schema to the desired one. If it isn't a subset of the desired schema, the build fails (note: we've omitted how the schema is shared with the consumer[s])
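The two comparison rules in the third and fifth steps (an exact match for the provider, a subset for the consumer) can be sketched in plain TypeScript. This is a minimal illustration under a simplifying assumption: each schema is reduced to a flat map of property names to JSON types. The `SimpleSchema` type and both helper functions are hypothetical and not part of any tool mentioned in this post.

```typescript
// Hypothetical, deliberately simplified schema shape: property name -> JSON type.
// Real JSON Schemas are much richer; this only models the comparisons described above.
type SimpleSchema = { [property: string]: string };

const hasOwn = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

// Provider gate: the schema generated from code must match the agreed schema exactly.
function providerMatches(agreed: SimpleSchema, generated: SimpleSchema): boolean {
  const agreedKeys = Object.keys(agreed).sort();
  const generatedKeys = Object.keys(generated).sort();
  if (agreedKeys.length !== generatedKeys.length) return false;
  return agreedKeys.every(
    (key, i) => key === generatedKeys[i] && agreed[key] === generated[key]
  );
}

// Consumer gate: every property the consumer relies on must exist in the
// agreed schema with the same type, i.e. the consumer's schema is a subset.
function consumerIsSubset(agreed: SimpleSchema, generated: SimpleSchema): boolean {
  return Object.keys(generated).every(
    (key) => hasOwn(agreed, key) && agreed[key] === generated[key]
  );
}

const agreed: SimpleSchema = { price: "integer", name: "string" };

// The provider generated exactly the agreed schema: the build passes.
console.log(providerMatches(agreed, { name: "string", price: "integer" })); // true
// The consumer only uses "name": still a subset, so the build passes.
console.log(consumerIsSubset(agreed, { name: "string" })); // true
// The consumer expects "price" to be a string: not a subset, so the build fails.
console.log(consumerIsSubset(agreed, { price: "string" })); // false
```

Treating the consumer check as a subset test is what lets a consumer ignore fields it doesn't use, while the provider is held to the whole agreed schema.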

Example:

Using a tool such as https://github.com/YousefED/typescript-json-schema, you can generate a schema from your code on either the consumer or the provider side. For example, the following declared type:

```typescript
export interface Product {
  /**
   * The price of the product
   *
   * @minimum 0
   * @TJS-type integer
   */
  price: number;

  /**
   * The name of the product
   *
   * @TJS-type string
   */
  name: string;
}
```

will be translated to:

```json
{
  "$ref": "#/definitions/Product",
  "$schema": "http://json-schema.org/draft-07/schema#",
  "definitions": {
    "Product": {
      "properties": {
        "price": {
          "description": "The price of the product",
          "minimum": 0,
          "type": "integer"
        },
        "name": {
          "description": "The name of the product",
          "type": "string"
        }
      },
      "type": "object"
    }
  }
}
```

Having generated the schemas from your code, you can then use tools like https://www.npmjs.com/package/json-schema-diff to compare them.
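To make "comparing schemas" concrete, here is a minimal property-level diff between two versions of the Product schema. It is a sketch under stated assumptions, not a reproduction of json-schema-diff, which handles far more of the JSON Schema vocabulary: it only compares property names and types, and the `PropertyTypes`, `SchemaDiff` and `diffProperties` names are hypothetical.

```typescript
// Hypothetical simplified view of a schema's properties: name -> JSON type.
type PropertyTypes = { [name: string]: string };

interface SchemaDiff {
  added: string[];   // new properties: usually safe for existing consumers
  removed: string[]; // properties that disappeared: potentially breaking
  changed: string[]; // properties whose type changed: potentially breaking
}

const hasOwn = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

function diffProperties(before: PropertyTypes, after: PropertyTypes): SchemaDiff {
  const diff: SchemaDiff = { added: [], removed: [], changed: [] };
  for (const name of Object.keys(after)) {
    if (!hasOwn(before, name)) diff.added.push(name);
    else if (before[name] !== after[name]) diff.changed.push(name);
  }
  for (const name of Object.keys(before)) {
    if (!hasOwn(after, name)) diff.removed.push(name);
  }
  return diff;
}

// A hypothetical change to the Product schema: "price" becomes a string
// and a new "sku" property is added.
const v1: PropertyTypes = { price: "integer", name: "string" };
const v2: PropertyTypes = { price: "string", name: "string", sku: "string" };

console.log(JSON.stringify(diffProperties(v1, v2)));
// {"added":["sku"],"removed":[],"changed":["price"]}
```

A build step could fail whenever `removed` or `changed` is non-empty, while letting additions through.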

This approach suffers from all of the downsides mentioned above, but is super quick to do and is quite low maintenance. Aside from having a means to share and agree on the current schema, there are no real dependencies between teams.

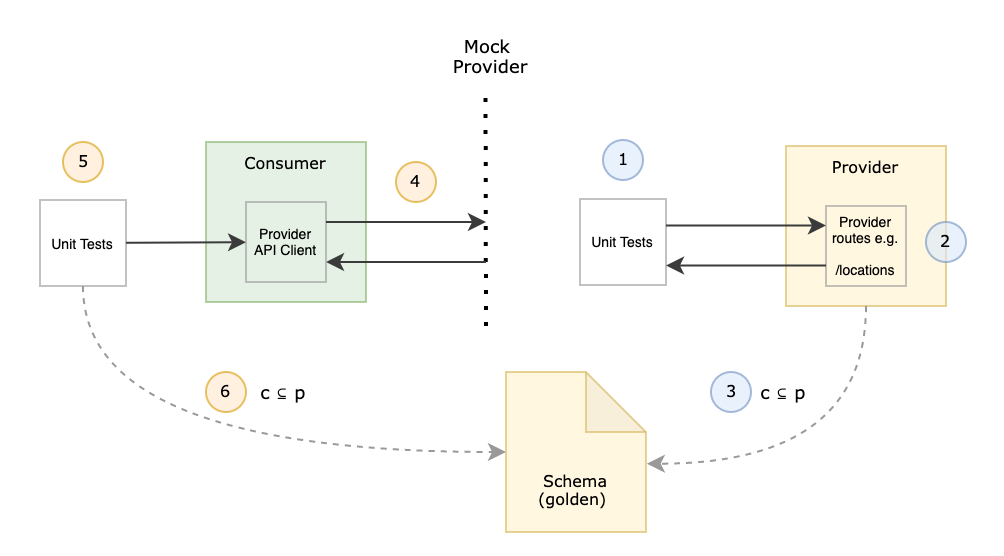

2. Code-based schema test

The next approach is to integrate schema testing into your unit testing framework. The steps are the same as above, except that steps 2 and 4 (generating a schema from code) are replaced by unit tests on either side of the interaction. There are many ways to achieve this, but the key difference in this type of testing is that you must invoke your code as part of the test and cross-reference the input/output from that test against the shared schema.
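Cross-referencing output against a schema boils down to validation: walking the value and checking it against each schema keyword. The sketch below hand-rolls that check for only the keywords used in the Product schema above (`$ref`, `type`, `properties`, `minimum`). `MiniSchema` and `validate` are hypothetical; in a real suite you would rely on a full validator (such as the matcher library used below) rather than this.

```typescript
// Hypothetical minimal subset of JSON Schema: just enough for the Product schema.
interface MiniSchema {
  $ref?: string;
  type?: "object" | "integer" | "string";
  minimum?: number;
  properties?: { [name: string]: MiniSchema };
  definitions?: { [name: string]: MiniSchema };
}

const hasOwn = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

// Returns a list of error messages; an empty list means the value matches.
function validate(value: unknown, schema: MiniSchema, root: MiniSchema = schema, path = "$"): string[] {
  // Resolve local references such as "#/definitions/Product" against the root schema.
  if (schema.$ref !== undefined) {
    const name = schema.$ref.replace("#/definitions/", "");
    const defs = root.definitions || {};
    return hasOwn(defs, name)
      ? validate(value, defs[name], root, path)
      : [`${path}: unresolved $ref ${schema.$ref}`];
  }

  if (schema.type === "object") {
    if (typeof value !== "object" || value === null || Array.isArray(value)) {
      return [`${path}: expected object`];
    }
    const record = value as { [key: string]: unknown };
    const props = schema.properties || {};
    let errors: string[] = [];
    // Only properties that are present are checked: the schema has no "required" list.
    for (const name of Object.keys(props)) {
      if (hasOwn(record, name)) {
        errors = errors.concat(validate(record[name], props[name], root, `${path}.${name}`));
      }
    }
    return errors;
  }

  if (schema.type === "string" && typeof value !== "string") {
    return [`${path}: expected string`];
  }
  if (schema.type === "integer" && (typeof value !== "number" || Math.floor(value) !== value)) {
    return [`${path}: expected integer`];
  }
  if (schema.minimum !== undefined && typeof value === "number" && value < schema.minimum) {
    return [`${path}: must be >= ${schema.minimum}`];
  }
  return [];
}

// The Product schema from above, minus the annotation-only keywords.
const productSchema: MiniSchema = {
  $ref: "#/definitions/Product",
  definitions: {
    Product: {
      type: "object",
      properties: {
        price: { type: "integer", minimum: 0 },
        name: { type: "string" },
      },
    },
  },
};

console.log(JSON.stringify(validate({ name: "Pizza", price: 10 }, productSchema))); // []
console.log(JSON.stringify(validate({ name: "Pizza", price: -1 }, productSchema))); // ["$.price: must be >= 0"]
```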

We'll use a fictional NodeJS project and the https://github.com/americanexpress/jest-json-schema library to illustrate the point.

Provider Test

For example, given the above products.json schema file and an API written in NodeJS, you could write a test for the /products/:id API resource as follows:

```javascript
const { matchers } = require('jest-json-schema');
expect.extend(matchers); // registers toMatchSchema

it('GET /products/:id route matches the schema', async () => {
  // arrange - import the shared products schema
  const schema = require('./schemas/products.json');

  // act - invoke the route handler directly (don't start the actual server);
  // getProductsRoute is assumed to resolve to an object with `status` and `body`
  const response = await getProductsRoute(1);

  // assert - check that the response body matches the schema
  expect(response.status).toBe(200);
  expect(response.body).toMatchSchema(schema);
});
```

This is nice because you don't need to start the actual server, which makes the test fast and flexible for things like stubbing. And because it actually executes your code, it gives you more confidence about behaviour than a type definition alone can provide. It still suffers from most of the above issues, and unless you combine it with other strategies (for example, schema projection) you'll need to manually confirm that you have sufficient API coverage (5).

Consumer Test

On the consumer side, you need to do a bit more work to set things up: you would normally create a separate set of fixtures that contain all of the schemas and request/response mappings.

For example, if your consumer was a NodeJS API you could use https://github.com/americanexpress/jest-json-schema to check your interactions as follows:

```javascript
const nock = require('nock');
const { matchers } = require('jest-json-schema');
expect.extend(matchers); // registers toMatchSchema

it('matches the JSON schema', async () => {
  // import the shared products schema
  const schema = require('./schemas/products.json');
  const productStub = { name: 'Pizza', price: 10 };

  // arrange - mock out the backend API
  const scope = nock('https://api.myprovider.com')
    .get('/products/1')
    .reply(200, productStub);

  // act - call your API client code
  const product = await productsClient.getProduct(1);

  // assert - check that your stub matches the schema and was actually used
  expect(productStub).toMatchSchema(schema);
  expect(scope.isDone()).toBe(true);

  // ... other checks for the behaviour of your productsClient (using `product`)
});
```

Note that on both sides of the contract, you need to explicitly map each HTTP interaction to the schema (or the definition within a schema) that it should satisfy. The provider team may be able to supply this mapping as part of their suite and share it with the consumer team, if the two teams can agree on a format.
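One way to express that mapping is a small fixture that both teams can read. The format below is purely an assumption for illustration (`InteractionMapping`, `mappings`, `matchesTemplate` and `definitionFor` are hypothetical names): each entry pairs an HTTP method and a path template with the JSON pointer of the schema definition the response body should satisfy.

```typescript
// Hypothetical shared fixture format for mapping interactions to schema definitions.
interface InteractionMapping {
  method: string;     // HTTP method, upper case
  path: string;       // path template; segments starting with ":" are parameters
  definition: string; // JSON pointer to the definition for the response body
}

const mappings: InteractionMapping[] = [
  { method: "GET", path: "/products/:id", definition: "#/definitions/Product" },
];

// Does a concrete path such as "/products/1" fit a template such as "/products/:id"?
function matchesTemplate(template: string, actual: string): boolean {
  const expected = template.split("/");
  const received = actual.split("/");
  if (expected.length !== received.length) return false;
  return expected.every((segment, i) =>
    segment.charAt(0) === ":" ? received[i] !== "" : segment === received[i]
  );
}

// Returns undefined for unmapped interactions; a test suite could treat that as a
// failure so that no stubbed or recorded interaction goes unchecked.
function definitionFor(method: string, path: string): string | undefined {
  const matches = mappings.filter(
    (m) => m.method === method.toUpperCase() && matchesTemplate(m.path, path)
  );
  return matches.length > 0 ? matches[0].definition : undefined;
}

console.log(definitionFor("get", "/products/1")); // prints #/definitions/Product
console.log(definitionFor("GET", "/orders/1"));   // prints undefined
```

Keeping the mapping as data rather than scattering it through test code is what makes it shareable between the provider and consumer suites.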

For a list of helpful tools in this space, take a look at this great post from APIs You Won't Hate.

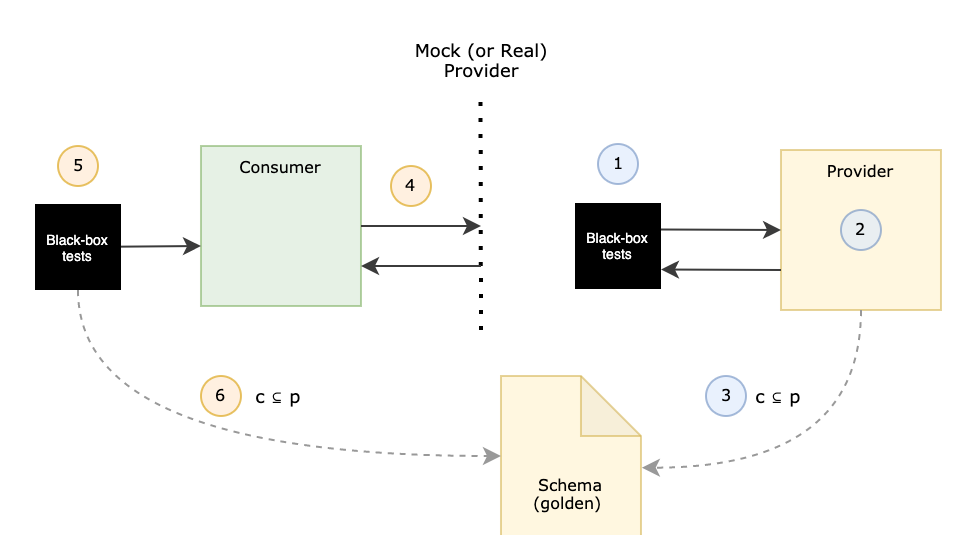

3. Black-box / integrated tests with recording

The last option is to run black-box or end-to-end (e2e) style tests from outside of the codebase, record the request and response information, and confirm that each interaction corresponds to its respective schema. This is possible from the consumer side, but it's more difficult: you need to establish a mock back end (or use a real one), ensure all requests between your component and the provider API are proxied, and set up any other state your component needs to be able to call the external system. I've been told by an ex-Googler that they use the Martian proxy to record and replay requests, combined with an e2e suite and an approval-based testing approach, to great effect.
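In general terms, approval-based testing stores an approved snapshot of some output and fails whenever fresh output differs, leaving a human to decide whether the change is acceptable. The sketch below shows that core comparison for a recorded interaction. It is an assumption about how such a check could look, not a description of the Martian proxy or of Google's setup; `stableStringify` and `verifyAgainstApproved` are hypothetical.

```typescript
// Serialise with sorted object keys so that key order in a recording
// does not cause spurious differences.
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const record = value as { [key: string]: unknown };
    const keys = Object.keys(record).sort();
    return `{${keys.map((k) => `${JSON.stringify(k)}:${stableStringify(record[k])}`).join(",")}}`;
  }
  return JSON.stringify(value);
}

interface ApprovalResult {
  approved: boolean;
  received: string; // handed to a human for review when not approved
}

// Compare a freshly recorded interaction with the previously approved snapshot.
// A mismatch (or a missing snapshot) fails the test; a human then either fixes
// the code or approves the received output as the new snapshot.
function verifyAgainstApproved(recorded: unknown, approvedSnapshot: string | undefined): ApprovalResult {
  const received = stableStringify(recorded);
  return { approved: received === approvedSnapshot, received };
}

const approved = stableStringify({ status: 200, body: { name: "Pizza", price: 10 } });

// Same content, different key order: still approved.
console.log(verifyAgainstApproved({ body: { price: 10, name: "Pizza" }, status: 200 }, approved).approved); // true
// The price changed: needs human review.
console.log(verifyAgainstApproved({ status: 200, body: { name: "Pizza", price: 12 } }, approved).approved); // false
```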

How much confidence this gives you depends on how many scenarios you run, roughly in line with what you'd expect from general integration test coverage. There is a myriad of tools that support this sort of testing; basically anything that can issue an HTTP request is a candidate, for example Postman or Prism (for OpenAPI). In this case, we're just writing a basic NodeJS test that issues a call to the real API.

Consumer

In this example, we use Talkback (a VCR clone for NodeJS) to stand up a proxy server between the consumer and the provider and record all of the interactions that pass through it.

```javascript
const fs = require('fs');
const JSON5 = require('json5');
const talkback = require('talkback');
const { matchers } = require('jest-json-schema');
expect.extend(matchers); // registers toMatchSchema

const schema = require('./schemas/products.json');
let server;

// (1) start the talkback server (recording proxy) in front of the provider
beforeAll(async () => {
  server = talkback({
    host: 'http://localhost:3000', // the provider API being recorded
    record: talkback.Options.RecordMode.NEW,
    port: 8000, // the consumer code under test must call this port
    path: './tapes',
    silent: true
  });
  await server.start();
});

// (2) run a series of tests that execute your consumer code
// and compare to a fixture containing mappings of requests/responses to schemas
// this can be done in any way that
describe('GET /products/:id', () => {
  it('matches the schema', async () => {
    // act - call the Products API (via the proxy)
    const res = await getProduct(1);

    // assert - compare the recorded tape to the schema
    const tape = fs.readFileSync('./tapes/<interaction 1>.json5', 'utf8');
    const body = JSON5.parse(tape).res.body;
    expect(body).toMatchSchema(schema);
  });

  // ...
});

// (3) shut down the recording proxy
afterAll(() => server.close());
```

This approach requires diligence: the schemas must be kept up to date with what the provider offers, new endpoints must be added as new consumer functionality is created, and a fixture mapping code and requests to the appropriate schema must be maintained.

Because the interactions are recorded, this approach does offer a way of capturing the consumed API surface area of a provider (7). Once recorded, you also have a fast and reliable provider stub service, because once Talkback has seen a request, it saves the response and stubs out future ones (this behaviour is configurable). If you continually validate these stubs against the current schema, you can have some confidence that your code will remain compatible.
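The record-then-replay behaviour, and the way recordings double as a list of the consumed API surface, can be sketched without any library. This toy `RecordReplayStub` is hypothetical and is not Talkback's implementation or API: it forwards a request to the provider the first time it sees it, saves the response, and replays it afterwards. Keying on method plus path is a simplifying assumption; real recording tools typically match on more than this.

```typescript
// Hypothetical shapes for a recorded HTTP exchange.
interface RecordedRequest { method: string; path: string }
interface RecordedResponse { status: number; body: unknown }

class RecordReplayStub {
  private tapes: { [key: string]: RecordedResponse } = {};

  // `forward` stands in for a call to the real provider.
  constructor(private forward: (req: RecordedRequest) => RecordedResponse) {}

  handle(req: RecordedRequest): RecordedResponse {
    const key = `${req.method.toUpperCase()} ${req.path}`;
    if (Object.prototype.hasOwnProperty.call(this.tapes, key)) {
      return this.tapes[key]; // replay the saved response
    }
    const res = this.forward(req); // record: call the real provider once
    this.tapes[key] = res;
    return res;
  }

  // The recorded keys list the parts of the provider API the consumer actually used.
  surfaceArea(): string[] {
    return Object.keys(this.tapes).sort();
  }
}

let providerCalls = 0;
const stub = new RecordReplayStub(() => {
  providerCalls++;
  return { status: 200, body: { name: "Pizza", price: 10 } };
});

stub.handle({ method: "GET", path: "/products/1" });
stub.handle({ method: "GET", path: "/products/1" }); // replayed: provider not called again
stub.handle({ method: "get", path: "/products/2" });

console.log(providerCalls);                      // 2
console.log(JSON.stringify(stub.surfaceArea())); // ["GET /products/1","GET /products/2"]
```

Validating every saved response against the current schema, as described above, is then a loop over the stored tapes.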

Another possibility here is to have the provider run these tests and share the tapes with the consumer team (this is essentially the Pact workflow but in reverse).

Provider

The provider side can be easier, because the only requirement is being able to start the provider. You do, however, need to be able to stub out other third-party systems to avoid this turning into an end-to-end integration test.

```javascript
const axios = require('axios');
const { matchers } = require('jest-json-schema');
expect.extend(matchers); // registers toMatchSchema

it('GET /products/:id matches the schema', async () => {
  // import the shared products schema
  const schema = require('./schemas/products.json');

  // act - call the actual running server from the outside
  const response = await axios.get('http://localhost:8000/products/1');

  // assert - check that the real response body matches the schema
  expect(response.status).toBe(200);
  expect(response.data).toMatchSchema(schema);
});
```

This has the benefit of letting you retrofit contract testing onto existing projects without having to write unit tests, which may be hard to do depending on the state of the codebase.

Next Time

In the final instalment, we'll demonstrate how you can use Pactflow to manage a schema-based contract testing workflow and talk about how we might be able to incorporate these approaches into our tooling in the future to support a wider range of testing.